Noisebridge


Noisebridge is a fun space for sharing, creation, collaboration, research, development, mentoring, and of course, learning. Come visit, work, and use our equipment for free (but please donate when you can!).

Noisebridge is also more than a physical space; it's a community with roots in hackerspaces extending around the world.

Goto 272 Capp

Visit Noisebridge Hackerspace at 272 Capp St, San Francisco, CA 94110 (maps: OSM, GMap)

2 blocks from 16th St Mission BART

Watch Video Tour March 2023

Noisebridge is open in-person again and you can also join us remotely online!

Accessibility: The ground floor is wheelchair accessible, with resources; the upstairs is not.

Hours: We are open in-person during these hours or when somebody is around:

Mon 3-9pm | Tue 6-10pm | Wed 4-9pm | Thu 4-8pm | Fri 12pm-12am | Sat 12-5pm | Sun 12-4pm

Events and Classes

Events are haphazardly cross-posted on Meetup, the Discord, and Google Calendar.

Some Noisebridgers have also started hosting events from a calendar at https://noisebridge.today/, with a new (additional) associated gCal.

Key:

- W: Weekly

- 1st 2nd 3rd 4th: Certain weeks

- -2nd: Except certain weeks

- S: Streaming

- event: caution, may be dead

- event: management of space

Upcoming Events

- Friday, May 31 - SAN FRANCISCO WRITERS WORKSHOP Fundraiser for Noisebridge! Event: 7-9pm (set-up starting at 5:30pm)

Mondays

| Tags | Time | Title | Description |

|---|---|---|---|

| W | 7:00pm | Meetups/Infra | Self-hosting, rough consensus, & running code. Find upcoming sessions on Meetup or in #meetup-infra on Discord. |

| W | 7:00pm | PyClass | A complete introductory Python course. Classes are held regularly. Find upcoming sessions on Meetup or in #python on Discord. (Returned! Mar '24) |

| W | you-o-clock | TRASH NIGHT | Please take out all three large trash bins!! They are on the patio. |


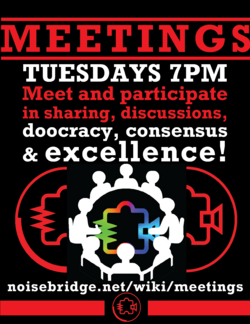

Tuesdays!

| Tags | Time | Title | Description |

|---|---|---|---|

| W S | 7:00pm | Noisebridge Weekly Meeting | (In person & online via Jitsi) - Introduce new people and events, joining, announcements, discussions, and consensus. Come express what you think about what's going on with your space! |

| W | 7:00pm | San Francisco Writers Workshop | Free drop-in writers workshop, get feedback and critique! Located on the first floor hackitorium. |

| 2nd 4th | 6:00pm | Numerati SF | Hacking the Stock Market with AI/ML. Every other Tuesday. Please RSVP on Meetup. |

Wednesdays

| Tags | Time | Title | Description |

|---|---|---|---|

| 3rd | 3:30pm - 5:30pm | Zinemakers meetup | Monthly gathering of zine, book, and comic creators to share ideas and work on projects. Newbies welcome! Confirm with Meetup. |

| 1st | 7:00pm - 8:30pm | Conflict Resolution | We do our best to address pressing issues, mitigate conflicts, and be excellent to each other. |

| 2nd 4th | 6:00pm - 7:00pm | Bike Psych! | Time and space to talk about transit-related projects. Confirm with Meetup. |

| W S | 7:00pm - 9:00pm | Machine Learning AI and RL Meetup | Weekly gathering of AI enthusiasts discussing cool things happening in the field and ongoing projects / learning tracks |

| 1st | 8:00pm | Woodhacking Wednesday | "Have a woodworking project in mind but you don't know where to get started? This is a good time to come ask questions, get some help, and ideas from other woodworkers. Everyone is welcome..." Confirm dates with Meetup. |

Thursdays

| Tags | Time | Title | Description |

|---|---|---|---|

| W S | 6:00pm - 8:00pm | Gamebridge | Game development mentoring & coworking meetup for gamedev beginners and indies alike. |

| 2nd 4th | 6:00pm - 8:00pm | Advanced Geometry SF | An event for people who would like to study/teach advanced topics in geometry. |

| 3rd S | 8:00pm - 9:00pm | Five Minutes of Fame a.k.a. 5MoF | Ten 5min talks in an hour, on any topic |

| 4th S | 8:00pm - 10:30pm | Resident Electronic Music | An electronic music open mic! (note: Nov/Dec pushed to Dec 10th, 6pm setup) |

| 4th | 9:00pm | Queer Game Developers Meetup! | "Here you will find other queer game devs, game-making enthusiasts, and be a part of a community that welcomes everyone who is interested in creating games." Please register on Eventbrite. See Meetup for more info. |

| W | you-o-clock | TRASH NIGHT | Please take out all three large trash bins!! They are on the patio. |

Fridays

| Tags | Time | Title | Description |

|---|---|---|---|

| W | 2:00pm - 5:00pm | Hack on Noisebridge! | A good open time for cleaning 'n re-organizing the physical space. See #totally-secret-cabal-of-space-organizers on the Discord. |

| | | Brewery meetup - brewing & tasting days | Come learn to brew mead with Eliot and Andy! Check the Meetup for dates or check in #brewing on Discord. |

Saturdays

| Tags | Time | Title | Description |

|---|---|---|---|

| W | 9:30am - 1:00am | Hack on Noisebridge! | Cleaning, physical organizing and re-organizing, donuts. See #totally-secret-cabal-of-space-organizers on Discord! |

| W | 2:00pm - 6:00pm | Free Code Camp | with |

| 3rd | 2:00pm - 6:00pm | Godot Meetup | Gamedev workshop & networking for users of the Godot game engine. We have two meetups this March around GDC: March 16th and March 23rd! |

| 4th | 1:00pm - 3:00pm | Building Guitar Pedals Workshop | Come build guitar pedals or other electronic music equipment! Look for the #pedal-building channel under #events in the Discord. Check the Meetup for more info and confirmed dates. |

Sundays

| Tags | Time | Title | Description |

|---|---|---|---|

| W | 2:00pm - 4:00pm | Laser Cutter training | Get certified to use the laser cutter. |

| 2nd | 2:00pm - 3:00pm | Fabrication 101 2nd Shop Sundays | Class on safety and basic techniques. |

| W | 6:00pm - 7:15pm | Noisebridge 15th Anniversary Planning Meeting | Planning for Noisebridge 15. Anyone and everyone is welcome to come and help with planning, etc. |

| W | 7:30pm - 10:00pm | Self-Driving Flying Car Meetup training | class, discussion, and meetup on flying cars |

| 2nd S | 2:00pm - 4:00pm | BAHA: Bay Area Hackers' Association | Security Hacking Meeting 2nd Sundays at 272 and via Jitsi |

Other Bay Area Consortium of Hackerspaces Events

- Queerious Labs is open during limited times; check its status.

- SudoRoom: Women & Non-Binary Coding Mondays 7-9pm

- SudoRoom: Hardware Hacking Tuesdays 7-10pm

Anyone can participate in a class or workshop at Noisebridge! No membership or payment is required!

Photos

You are standing at Noisebridge Hackerspace at 272 Capp Street. A gate leads to a patio. Behind the fence are a roll-up door and a front door. You see a Noisebridge sign and trash bins.

Info

Intro Poster: How we explain ourselves to new visitors.

Resources: Stuff in the space -- computer network & servers, project areas, tools, bulk orders from Digikey/McMaster/Mouser.

The Noisebridge Manual: A compendium of wiki knowledge detailing how Noisebridge works.

Safety in the Space: What to do in case of an emergency.

Press Coverage: Mentions of Noisebridge in the media (both blog and dead tree).

Identity: A collection of resources revolving around our identity and logo.

The Neighborhood: What's in the neighborhood around 272 Capp Street.

Hosting an Event at Noisebridge: Suggestions on how to use Noisebridge for your event/class/workshop.

Hackerspace Infos: Howtos, Background, and friendly Hackerspaces elsewhere.

Get in Touch

- Discord - Chat with us on Discord!

- Slack (requires invite)

- Press: Please see our Press Kit - Includes press contacts, pictures, background info, etc.

- Contacts - General contact details for the space

- Meetup - The most up-to-date events list and a good way to contact event hosts.

- To-do-ocracy - Help us complete our many improvements for Noisebridge! https://trello.com/b/votKcUok/lets-improve-noisebridge-pt-2-pandemic-boogaloo

- People

- IRC channel - irc://chat.freenode.net/#noisebridge

- Legal Requests (information removal, etc)

- Here is our mailing address (different from our physical address):

Noisebridge

2261 Market Street #235-A

San Francisco, CA 94114

- Or just drop by the space. New visitors should read up on getting in.

272 Capp St,

San Francisco, CA 94110

Noisebridge Lore

Noisebridge grew out of Dorkbot and Chaos Communication Camp in August 2007. We have had regular weekly Tuesday meetings since September 2007. We rented our first physical space at 83c on September 1st, 2008, and quickly outgrew it. From September 1st, 2009 to mid-2020 we were at our much larger 5,200 square-foot second location at 2169 Mission. We are now at 272 Capp St., with 6,000 square feet, just behind the old Mission St. location. Noisebridge was granted tax-exempt 501(c)(3) status in July 2009, retroactive to October 2008.